As a global leader and innovator in cloud, messaging, and digital platforms and products, Synchronoss is proud to announce that we have chosen Kubernetes as the container orchestration platform for automating our deployments and scaling our platform. We believe that adopting Kubernetes as part of our deployment strategy will greatly enhance our ability to deliver for our customers at scale, shortening onboarding time and reducing the chance that our platform is deployed incorrectly.

What Is Kubernetes?

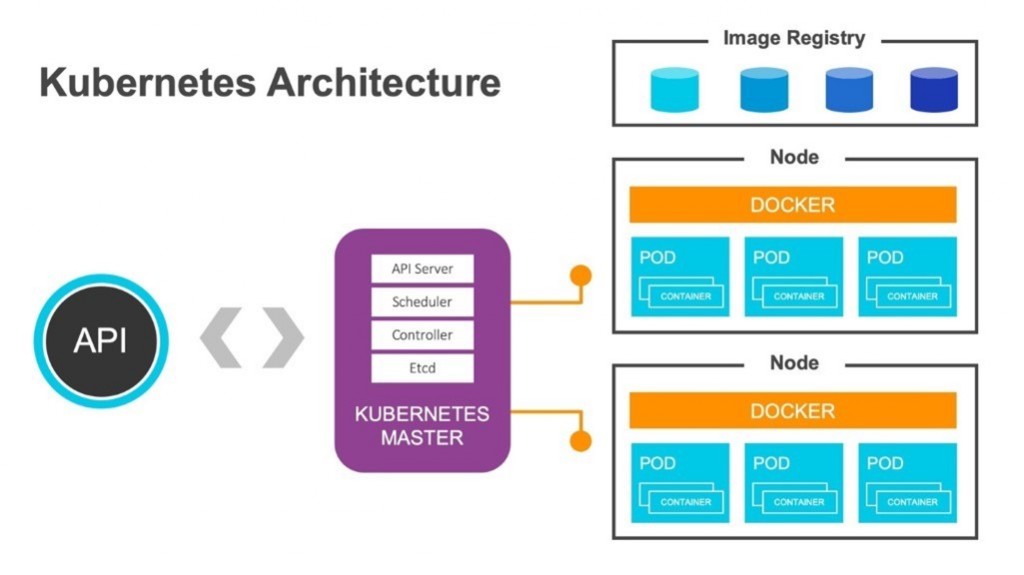

Meaning “helmsman” or “pilot” in ancient Greek, Kubernetes is increasingly recognized as the name of an open-source container orchestration system that enables the deployment and management of containerized applications at scale. Container orchestration is used to automate the deployment, management, scaling, networking and availability of individual containers for applications based on microservices.

Why We Chose Kubernetes

Because of its high degree of security, reliability and scalability, many Fortune 500 companies are choosing Kubernetes to run their most mission-critical applications. After evaluating a variety of container orchestration systems, we found Kubernetes to be the obvious choice for Synchronoss. It has become the leader in the container orchestration space over the last several years and is being adopted by a growing number of enterprises because of its flexibility, velocity and reduced cost. This, together with the support of the large Kubernetes community, made it the logical next step for the future of our deployments.

Kubernetes is now powering our Personal Cloud and DXP product offerings, and we’ll be deploying the remainder of our products on the extensible, open-source platform in the coming months. Our decision has already begun delivering measurable impact. Previously, adding new server capacity could take hours, if not days. With Kubernetes, we can scale our platform vertically and horizontally in the areas that need it most, quickly and with very little overhead.

The Advantages of Kubernetes

• Horizontal scaling of resources

Containers can be scaled manually or automatically as needed using the Horizontal Pod Autoscaler built into Kubernetes. Additionally, new infrastructure can easily be added or removed to adjust the overall capacity of the cluster.
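To give a feel for how automatic scaling decides on a replica count, here is a minimal Python sketch of the scaling rule described in the Kubernetes documentation: the desired count is the current count multiplied by the ratio of the observed metric to the target metric, rounded up, with no change when the ratio is within a small tolerance of 1. The function name and parameters are illustrative, and the real autoscaler also applies minimum and maximum bounds and stabilization windows that are omitted here.

```python
import math


def desired_replicas(current_replicas, current_metric, target_metric, tolerance=0.1):
    """Illustrative version of the horizontal scaling rule (not the real controller)."""
    ratio = current_metric / target_metric
    # Skip scaling when the observed metric is close enough to the target.
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas
    # Round up so that capacity is never under-provisioned.
    return math.ceil(current_replicas * ratio)


if __name__ == "__main__":
    # 4 replicas at 90% average CPU with a 60% target
    print(desired_replicas(4, 90, 60))  # prints 6
```

In this example, a workload running at one and a half times its target utilization grows from four replicas to six.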

• Infrastructure as code

All our infrastructure, from the deployments and services for the application to the load balancers, is maintained under source code control. This provides a mechanism to deploy our software reliably and repeatably, with a very high level of automation.

• Self-healing services

The Kubernetes “desired state” principle ensures that the overall state of the application running in the cluster matches what is defined in our manifests under source code control. Failed containers are restarted or replaced based on this configuration to provide optimal uptime.
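The idea behind “desired state” can be sketched as a small reconciliation step: compare what is running with what the manifest asks for, then act on the difference. The Python below is a simplified illustration only. The container records, the `web-` naming, and the `reconcile` function are hypothetical and are not part of Kubernetes itself.

```python
from itertools import count


def reconcile(desired, containers, name_source):
    """Return (containers after reconciliation, list of actions taken)."""
    # Failed containers are removed from the running set.
    actions = [f"remove {c['name']}" for c in containers if not c["healthy"]]
    running = [c for c in containers if c["healthy"]]
    # Start replacements until the desired count is reached.
    while len(running) < desired:
        name = f"web-{next(name_source)}"
        running.append({"name": name, "healthy": True})
        actions.append(f"start {name}")
    # Stop extras if more are running than the manifest asks for.
    while len(running) > desired:
        extra = running.pop()
        actions.append(f"stop {extra['name']}")
    return running, actions


if __name__ == "__main__":
    observed = [
        {"name": "web-0", "healthy": True},
        {"name": "web-1", "healthy": False},
        {"name": "web-2", "healthy": True},
    ]
    _, actions = reconcile(3, observed, count(3))
    print(actions)  # prints ['remove web-1', 'start web-3']
```

Running the same step again on the result produces no actions, which is the property that makes the approach self-healing: the loop only acts when reality drifts from the declared state.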

• Zero-downtime deployments and hassle-free rollbacks

The deployment strategies provided by the orchestrator give us the ability to deploy new versions of our products safely, without incurring downtime. They also let us roll back to a previous version with a single command if needed. Because a deployment occurs while the system is running, the application continues to service requests while the new version of the software is being rolled out. This provides enormous benefits in meeting our uptime service level agreements.
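As an illustration of how a rolling update avoids downtime, the following Python sketch simulates replacing old containers with new ones under two limits that Kubernetes Deployments expose, `maxSurge` and `maxUnavailable`. It is a simplified model, not the real controller: it uses absolute counts rather than percentages, and it assumes every new container becomes ready immediately.

```python
def rolling_update(replicas, max_surge=1, max_unavailable=0):
    """Simulate a rolling update; returns a list of (old, new) counts per step."""
    if max_surge == 0 and max_unavailable == 0:
        raise ValueError("max_surge and max_unavailable cannot both be 0")
    old, new = replicas, 0
    history = [(old, new)]
    while new < replicas or old > 0:
        # Start new containers without exceeding replicas + max_surge in total.
        can_start = min(replicas - new, replicas + max_surge - (old + new))
        new += can_start
        history.append((old, new))
        # Stop old containers while keeping at least
        # replicas - max_unavailable containers running.
        can_stop = min(old, (old + new) - (replicas - max_unavailable))
        old -= can_stop
        history.append((old, new))
    return history


if __name__ == "__main__":
    for old, new in rolling_update(3):
        print(f"old={old} new={new} total={old + new}")
```

With three replicas and the default limits used here, the total never drops below three and never exceeds four, so the service keeps its full capacity for the whole rollout.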

• Canary deployments

Used as a pattern for rolling out releases to a subset of users or servers, canary deployments reduce the risk involved in releasing new software versions. With canary deployments, we can deploy a change (a feature or a full release) incrementally to a small subset of containers. This subset runs in parallel with the containers running the current version. Once testing is complete and the change is verified, we can roll out the new version to the entire cluster.
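One simple way to size a canary is by replica count: if traffic is spread evenly across all containers, the share of requests reaching the new version is roughly the share of containers running it. The Python sketch below works under that assumption. The `canary_split` function is hypothetical, and service mesh or ingress tooling can split traffic by weight instead of by replica count.

```python
def canary_split(total_replicas, canary_percent):
    """Return (stable, canary) replica counts for an approximate traffic share."""
    if not 0 < canary_percent < 100:
        raise ValueError("canary_percent must be between 0 and 100, exclusive")
    if total_replicas < 2:
        raise ValueError("need at least 2 replicas to run both versions")
    # Integer ceiling, so that at least one canary container always runs.
    canary = (total_replicas * canary_percent + 99) // 100
    # Keep at least one container on the current version.
    canary = min(canary, total_replicas - 1)
    return total_replicas - canary, canary


if __name__ == "__main__":
    print(canary_split(20, 10))  # prints (18, 2)
```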

• Service discovery and load balancing

Service discovery allows containers to be grouped behind a single, stable endpoint. As new containers are created, they are automatically added to the group and can immediately start handling requests. Load balancing is handled by Kubernetes services and ingress controllers, which direct traffic to the available containers.
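The grouping described above is based on labels: a service selects every ready container whose labels match its selector, so new containers join the group simply by carrying the right labels. The Python sketch below illustrates that matching plus a simple round-robin balancer. The pod records, IP addresses, and function names are made up for the example, and real load-balancing behavior depends on how the cluster is configured.

```python
from itertools import cycle


def select_endpoints(selector, pods):
    """Return the IPs of ready pods whose labels match every selector entry."""
    return [
        pod["ip"]
        for pod in pods
        if pod["ready"]
        and all(pod["labels"].get(key) == value for key, value in selector.items())
    ]


if __name__ == "__main__":
    pods = [
        {"ip": "10.0.0.1", "ready": True, "labels": {"app": "web"}},
        {"ip": "10.0.0.2", "ready": False, "labels": {"app": "web"}},
        {"ip": "10.0.0.3", "ready": True, "labels": {"app": "db"}},
        {"ip": "10.0.0.4", "ready": True, "labels": {"app": "web"}},
    ]
    endpoints = select_endpoints({"app": "web"}, pods)
    print(endpoints)  # prints ['10.0.0.1', '10.0.0.4']

    # Round-robin: each request goes to the next endpoint in turn.
    balancer = cycle(endpoints)
    print([next(balancer) for _ in range(3)])  # prints ['10.0.0.1', '10.0.0.4', '10.0.0.1']
```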

• Service mesh add-ons

The community offers several service mesh solutions today that provide a rich set of security and traffic-control features.

What the Deployment of Kubernetes Means for Our Customers

We believe that adopting Kubernetes as part of our deployment strategy will greatly enhance our ability to deliver for our customers at scale. Onboarding can happen within days instead of months. And because all deployments are identical, there is very little chance of our platform being deployed incorrectly. We can also roll out new versions of our software, and even roll back a version with ease if there is ever a problem, without the need for downtime. This enables us to honor our service level agreements by exposing customers to as little service downtime as possible.